Let's see together what the robots.txt and the meta tags Noindex Nofollow

Search engines use spiders to scan websites. To take full advantage of the potential offered by search engines, it is essential to know how to communicate with spiders. In fact, sometimes it may be necessary to prevent them from indexing certain pages.

The use of the robots.txt and meta tags Noindex Nofollow They help us with this.

However, one must be careful how one uses them since they are many different tools that serve different purposes.

The robots.txt file is the one that allows us to manage the directions to be given to search engine spiders that crawl the site. Through the command Disallow robots.txt tell spiders to block crawling on a page or the entire site.

Metatgs Noindex Nofollow Instead, they act on individual pages and prevent indexing of the scanned page (noindex) and links (nofollow).

In a nutshell then we can say that the robots.txt file acts at the crawling level while the Noindex Nofollow meta tags act at the indexing level.

The Disallow robots.txt command acts on the scan

The Disallow command to be entered in Robots.txt gives precise directives to search engine spiders and should be used very judiciously also because it is one of the most important steps for indexing SEO of a website.

Indeed, the robots.txt file, by restricting access to certain areas of the site, lightens the scanning process. In a website with a large amount of content scanning all folders and subfolders etc. can be a very burdensome operation for spiders that penalizes the performance of the portal. The robots.txt file comes into operation to avoid this inconvenience.

Beware, however, of the pages to which you apply the disallow command. The operation must be very judicious taking care to select only those pages that are not important for SEO purposes. This will reduce the load on the server and speed up the indexing process.

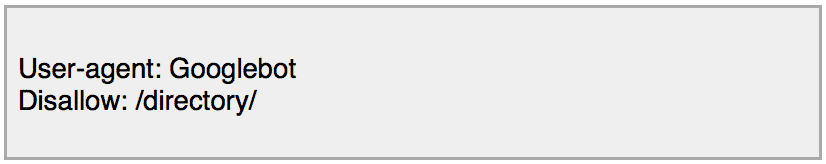

The robots.txt file consists of two fields: the "User-agent" field and one or more "Disallow" fields.

- User-agent is used to indicate to which spider the directives are addressed

- Disallow is used to indicate to which files and/or directories the spider previously indicated not can access.

Part of code to be used in robots.txt for User-Agents

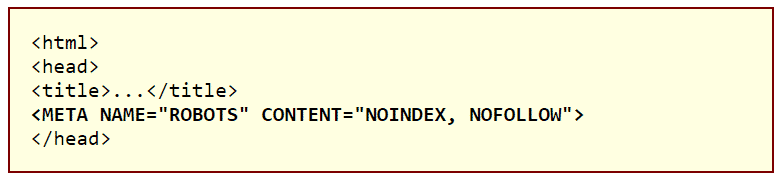

Noindex Nofollow meta tags affect indexing

The Noindex meta tag acts at the indexing level. When spiders scan the page and find the Noindex meta tag they remove it from their index and the page will not be able to appear in search results.

But why might it be useful to deindex some pages of our website? The noindex SEO with a view to optimization is to be applied to all those pages that might be of little interest to search engines such as duplicate pages, off topic pages, or even tag pages, those explaining the site policy, or pages containing brief service information. Search engines consider these pages as spam engine and if their number is high, the whole website can be penalized or downgraded.

The Nofollow meta tag also affects indexing but is specific to links. In this case spiders do not index links marked with the nofollow attribute and, again with a view to good ranking, nofollow is used to avoid passing part of one "s ranking to the linked external site.

HTML example of nofollow noindex

Even in the case of paid links, it is always preferable to include a Nofollow, because search engines do not like it when we get paid to link to a site. Same for banner ads that link to other sites.

Through the Noindex Nofollow meta tags pages and links cease to exist only for search engines while they remain available for users' reference.

Disallow and noindex: never use them together

The robots.txt file and Noindex Nofollow meta tags are very useful tools for creating an effective website. Fully understanding the difference between these commands can help us avoid very common mistakes.

When you apply the Disallow directive the page is not crawled. If the Noindex meta tag is also added to the same page the spiders will not be able to read it since they do not have access to crawl the page. Using them together is a serious mistake.

In fact, in cases like these we run into the situation whereby an unscanned page can still be indexed, because spiders do not have access to read the Noindex command.

So it is useful to repeat that when you want to explicitly block a page from being indexed, you use the Noindex meta tag and must allow crawling for the tag to be recognized and executed.

A similar situation can also happen when a page that we have banned for crawling is linked from other websites or shared on social networks. In fact, if a URL is blocked by robots.txt but a page contains a link to this URL, a situation can arise whereby a result comes up in the SERP with no title and no snippet, causing a bad user experience for the user.

Using these commands judiciously and being clear about what they are being applied for will allow us to avoid being penalized and by search engines and our users!

Now that you understand the function of robots.txt and Noindex Nofollow schedule changes to your website as soon as possible so that you can further boost its optimization. If you have concerns about specific situations you are facing on your portal, please write in the comments.